Computer programs that crunch data from arrest reports, court records, even social media accounts, and then spit out predictions about future crimes to stop them before they happen, promise to have policing down to a science. But hidden in the blind spots of these data-crunching algorithms lie numerous civil liberties concerns.

Now that “predictive policing” tech is no longer just fodder for Tom Cruise sci-fi thrillers, but increasingly sophisticated and commonplace in police departments around the country, we must dig beneath the veneer of objectivity that we reflexively assign to data and fully examine the biases that our criminal justice system bakes into the numbers.

Regardless of the developer, predictive policing programs use data collected by people, who have their own internal biases, to make forecasts about the future. The end result is that those biases are projected into the future, but “tech-washed” as impartial, objective data.

Here’s an example of how this plays out: police concentrate enforcement efforts in communities of color, leading to an overabundance of data about crime in those places. That information is then fed into computer programs as “historical crime data” and used to make predictions about who will commit crimes in the future and where those crimes are likely to take place. Unsurprisingly, algorithms identify communities of color as “hotspots” for crime and direct patrol cars accordingly.

Old biases of the past are both replicated and made invisible under a false guise of impartiality. Meanwhile, crimes that occur in other neighborhoods, but that are not reported or investigated by police, do not become data points for future forecasts.
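The feedback loop described above can be made concrete with a toy model. The sketch below is purely illustrative and is not the ICS model or any vendor's algorithm: the function name, the two-neighborhood setup, and the assumption that recorded crime scales with the share of patrols present are all hypothetical simplifications.

```python
def simulate_feedback(true_rate, initial_patrol_share, rounds=5):
    """Toy model of patrol allocation driven by recorded (not actual) crime.

    true_rate: actual crimes per period in each neighborhood (assumed values).
    initial_patrol_share: fraction of patrols sent to each neighborhood at the start.
    Returns the patrol shares after each round, starting with the initial shares.
    """
    share = list(initial_patrol_share)
    history = [tuple(share)]
    for _ in range(rounds):
        # Assumption: police only record crime where they are present to see it,
        # so recorded crime is the true rate scaled by the local patrol share.
        recorded = [rate * s for rate, s in zip(true_rate, share)]
        total = sum(recorded)
        # "Predictive" step: next round's patrols follow the recorded numbers.
        share = [count / total for count in recorded]
        history.append(tuple(share))
    return history


if __name__ == "__main__":
    # Two neighborhoods with identical true crime rates, but patrols start 70/30.
    for step, shares in enumerate(simulate_feedback([10.0, 10.0], [0.7, 0.3])):
        print(step, [round(s, 3) for s in shares])
```

In this toy setup both neighborhoods have the same underlying crime rate, yet the patrol split never moves away from its initial 70/30 skew, because the only data the model sees is data the skewed patrols produced. The numbers appear to confirm the original enforcement pattern rather than correct it.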

A group of researchers from the University of Cincinnati’s Institute of Crime Science (ICS) recently gave a presentation to New Mexico legislators on the Criminal Justice Reform subcommittee touting the benefits of their predictive policing technology. While some police departments, like Albuquerque and Rio Rancho, already possess some form of predictive tech, legislators are considering purchasing a number of tools, including those created by ICS, to be implemented across various stages of the entire state’s criminal justice system.

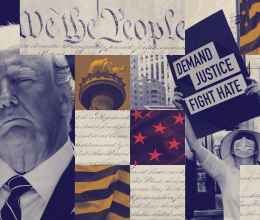

Before we roll out a statewide program that will impact the everyday lives of New Mexicans, we must question how the data will be used, how transparency will be ensured, and how people’s constitutional rights will be protected.

Data can be a force for good, but only if it does not replicate or reinforce historical and contemporary harms. We must collect data with an eye toward rooting out abuse of power, inequalities, and injustice, so that we can address those problems and make our communities stronger and safer.

This, however, is not always a priority for law enforcement. APD, the largest police department in our state, has neglected to collect adequate data about use-of-force instances and complaints against officers, even though a 2014 settlement agreement with the Department of Justice mandated that it do so. Without consistent data on officer conduct, the department’s Early Intervention System (EIS) cannot properly flag the need for further training, counseling, or any other corrective action.

Ensuring data does not reinforce past harms also requires seeking out biases and blind spots by opening up the systems we employ and making them fully transparent. This means, no matter which predictive policing model New Mexico uses, all data inputs must be revealed to the public. Vague references to “historical crime data,” with no mention of specifics, will not do.

If the ICS model, or any other program, mines social media accounts looking for contacts of people with prior convictions, or sorts through video surveillance footage tagging certain physical gestures as suspicious to make predictions about future criminal activity, the public has a right to know. They also have a right to know how predictive programs calculate risk and what measures legislators will take to protect their constitutional rights.

Because predictive policing programs, like the one developed by the ICS, boast the ability to identify linkages between people and create “degrees of separation diagrams” for criminal networks, they have the potential to incorrectly flag people as gang members and expose them to increased scrutiny without their knowledge. Any technology that is implemented in New Mexico must not trample on people’s Fourth Amendment rights to be free from searches and arrest without probable cause — and computer-driven hunches do not count.

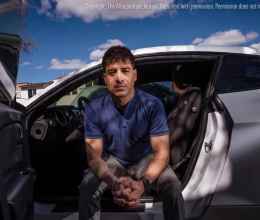

ICS researchers claim their model actually improves trust between law enforcement and community members by targeting only those people who commit crimes, rather than surveilling whole communities. But people live in neighborhoods, with loved ones, family, and friends, as well as alongside neighbors. How are the people surrounded by predictive tech’s targets not to get caught up in the machine?

And do the people with prior arrests and convictions even have a chance at turning their lives around if police are waiting outside their doors?

That depends on how police react to the program’s predictions. If they utilize them to pinpoint where social services are needed, as the ICS model suggests is possible, then perhaps these programs could help at-risk individuals by more effectively allocating educational opportunities, job training, and health services.

Another, more frightening possibility is that this technology will be used to further oppress already marginalized people, and in our trust of data, we’ll be blind to that injustice.